person trust system

'what you believe' as downstream of 'who you trust'

tl;dr: this doc introduces the idea of a person trust system. who we trust, what we trust and when we trust are key factors in knowledge quality, and this doc sketches how that general system works. i use concepts from social systems and ashby’s law of requisite variety to explore how social systems balance variety and order to find truth. i claim that social systems naturally limit and filter information through rules grounded in words and data structures, and that true expertise comes from people who can pull together knowledge from different fields while staying independent enough to integrate information at more fundamental levels of abstraction. the doc critiques today’s knowledge systems for becoming overly fragmented, which makes it harder to integrate ideas deeply. i highlight "interfaces" as crucial for connecting knowledge across disciplines and point out that there’s little incentive to take on these roles. finally, i comment on the possibility of quantifying the social costs of pursuing truth, showing how challenging dominant paradigms can be costly but often reflects higher-order thinking.

‘computer science is no more about computers than astronomy is about telescopes.’ — edsger dijkstra

‘academia is to knowledge what prostitution is to love; close enough on the surface but, to the nonsucker, not exactly the same thing’ ― nassim nicholas taleb

‘most of the wonderful places in the world were not made by architects but by the people.’ — christopher alexander

‘every single field always turns out to be like ten concepts that you eventually have to understand.’ — unknown

‘quit trusting authority, and you are alone on a black sea in a black night. the truth is out there. but you have not a thousandth of the time, money, or mind you would need to find it on your own.’

‘here is why you do not understand reality — it is complex — here is where our best thinkers are: when the mainstream is four orders of magnitude away from reality, three orders of magnitude looks like a truth-teller, two orders of magnitude looks like a prophet, and one is practically athanasius contra mundum.’ — curtis yarvin

‘the great discovery of the 20th century wasn’t atomic power. it was the power of cliques. a few people in positions of power sticking with each other is the most powerful force in the universe. they can make lies become truth. they can make toilets be sold as art, they can make women be combat soldiers. they can do anything.’

‘magisterium. stephen gould’s term for a domain where some community or field has authority. gould claimed that science and religion were separate and non-overlapping magisteria. on his view, religion has authority to answer questions of "ultimate meaning and moral value" (but not empirical fact) and science has authority to answer questions of empirical fact (but not meaning or value).’

‘broadly integrative thinking is relegated to cocktail parties. in academic life, in bureaucracies, and elsewhere, the task of integration is insufficiently respected. yet anyone at the top of an organization ... has to make decisions as if all aspects of a situation, along with the interaction among those aspects, were being taken into account. is it reasonable for the leader, reaching down into the organization for help, to encounter specialists and for integrative thinking to take place only when he or she makes the final intuitive judgements?’ – dominic cummings

‘akerlof takes used car sales as an example, splitting the market into good used cars and bad used cars (bad cars are called "lemons"). if there's no way to distinguish between good cars and lemons, good cars and lemons will sell for the same price. since buyers can't distinguish between good cars and bad cars, the price they're willing to pay is based on the quality of the average in the market. since owners know if their car is a lemon or not, owners of non-lemons won't sell because the average price is driven down by the existence of lemons. this results in a feedback loop which causes lemons to be the only thing available.’ — ‘the market for "lemons": quality uncertainty and the market mechanism’

‘if you look at the social incentives behind the idea of a genius; structures on top of structures on top of structures incentivize us away from being able to see this level of improvement: human intelligence is structurally incentivized to create faux intelligence scarcities; high iq individuals protect the purity of their intelligence; countless industries (ex. finance, pharma and law) profit from language asymmetries, and large scale collective incentives create an invisible dismissal towards the fact that the average individual can make huge leaps in intelligence through breaking down knowledge and assimilating it to the individual's collection of models. this sorta, biological determinism, the argument that human nature or human characteristics arise as an inevitable consequence of biological characteristics.’ — michael nielsen

‘given the choice, i’d hire sherlock holmes—the antiquated sherlock holmes, from the 19th century, a man for whom “technology” means the light bulb—over any present-day mit engineering phd. in some cases, technical skills can even be counterproductive, for the same reason that upper-body strength can actually make inexperienced rock climbers worse. if beginners can muscle their way through simple climbing routes, they don’t develop the technique necessary to complete harder ones. analysts who are technically and statistically proficient tend to do the same, defaulting to overpowered solutions over more elegant—and ultimately more useful—ones.' — benn stancil

‘i truly believe that it was rational to resort to prayers in place of doctors: consider the track record. the risk of death effectively increased after a visit to the doctor. sadly, this continued well into our era: the break-even did not come until early in the 20th century. which effectively means that going to the priest, to lourdes, fatima, or (in syria), saydnaya, aside from the mental benefits, provided a protection against the risks of exposure to the expert problem. religion was at least neutral – and it could only be beneficial if it got you away from the doctor. the easy part is to show that religion was superior to science. it is hard to accept it: religion protects you from bad science.’ — nassim taleb

‘the last project that i worked on with richard [feynman] was in simulated evolution. ... when i got back to boston i went to the library and discovered a book by kimura on the subject, and much to my disappointment, all of our "discoveries" were covered in the first few pages. when i called back and told richard what i had found, he was elated. "hey, we got it right!" he said. "not bad for amateurs." in retrospect i realize that in almost everything that we worked on together, we were both amateurs. in digital physics, neural networks, even parallel computing, we never really knew what we were doing. but the things that we studied were so new that no one else knew exactly what they were doing either. it was amateurs who made the progress.’ — w. daniel hillis: richard feynman and the connection machine

‘similarly, his conception of a new spiritual elite untarnished by the posturing of the “obrazovanshchiki” echoed the distinctions made by the same three contributors (as well as a.s. izgoev in this instance) between the intelligentsia as a mass phenomenon and the existence of genuine thinkers who, more often than not, were despised by the intelligentsia as socially and politically irrelevant. (it would be better if we declared the word “intelligentsia” – so long misconstrued and deformed – dead for the time being. of course, russia will be unable to manage without a substitute for the intelligentsia, but the new word will be formed not from “understand” or “know”, but from something spiritual. the first tiny minority who set out to force their way through the tight holes of the filter will of their own accord find some new definition of themselves, either while they are still in the filter, or when they have come out the other side and recognize themselves and each other. it is there that the word will be recognized, it will be born of the very process of passing through. or else the remaining majority, without resorting to a new terminology, will simply call them the righteous. it would not be inaccurate to call them for the moment a sacrificial elite. [...]. it is of the lone individuals who pass through (or perish on the way) that this elite to crystallize the people will be composed.)’

a person trust system is exactly what it sounds like: a conceptual space for understanding the system that informs the decision of who, what group and what era to trust and for what.

the core assumption of the person trust system: trust is the control variable of knowledge quality.

a person trust system acts as a foundational variable—a "dabing" (a metaphorical dial that, when turned slightly, can completely rewrite a conceptual model)—of knowledge quality. knowledge is a product of the extent to which one can trust another person/system and the information they share. trust determines whether one believes their neighbor (or social system), which neighbor can be trusted, and to what extent the information provided is credible.

this is not to say that knowledge doesn’t come from empirical validation, logical reasoning, repeatable observations, scientific principles, and so forth. rather, it is to say that, for more knowledge than we care to admit, other factors—summarized as the conceptual space known as the person trust system—serve as the control variable.

two related points: (1) a person trust system is often a good summary of someone's beliefs; (2) the person trust system is just as much an "era trust system" and an "industry or institution trust system."

the connection between trust and coordination

the person trust system is downstream of our general theory of coordination, which states:

life, as both a status and an attribute, is a function of ashby’s law of requisite variety.

the less variety a system has in its regulators, the less life that system can sustain.

for social and knowledge systems, ashby’s law can be operationalized in terms of information attractors (what draws people/information in) and information integrators (what enables people to process and connect ideas). put simply, a knowledge system thrives only if it equips its members with tools to access, attract, process, and integrate the right information.
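ashby's law is usually stated as a counting bound: the variety of outcomes is at least the variety of disturbances divided by the variety of the regulator, |O| ≥ |D| / |R| (in logarithmic form, H(O) ≥ H(D) − H(R)). the sketch below checks that bound by brute force on a toy table. it is an illustration under assumptions, not a model of any real knowledge system: "disturbances" stand in for the information a system must handle, "responses" for its regulators, the outcome rule `(d + r) mod n` is chosen only because it satisfies ashby's condition that, for a fixed response, different disturbances give different outcomes, and `min_outcome_variety` is a hypothetical name.

```python
from itertools import product
from math import ceil


def min_outcome_variety(n_disturbances: int, n_responses: int) -> int:
    """Fewest distinct outcomes any regulator policy can achieve.

    Toy assumption: outcome = (disturbance + response) mod n_disturbances.
    Brute force over every policy (one response per disturbance), so keep n small.
    """
    best = n_disturbances
    for policy in product(range(n_responses), repeat=n_disturbances):
        outcomes = {(d + r) % n_disturbances for d, r in enumerate(policy)}
        best = min(best, len(outcomes))
    return best


n = 6
for k in range(1, n + 1):
    # columns: regulator variety, best achievable outcome variety, ashby's bound
    print(k, min_outcome_variety(n, k), ceil(n / k))
```

in this toy table the achievable minimum meets the bound exactly: a regulator with less variety than the disturbances it faces cannot hold outcomes to a single state, which is the sense in which "the less variety a system has in its regulators, the less life that system can sustain."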

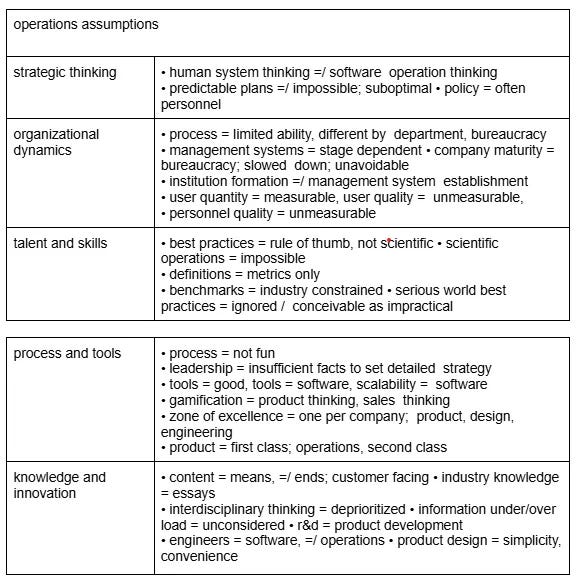

social systems are by definition exclusive. membership in a social system requires limiting one’s degrees of freedom: restricting which information can be accepted and how time is spent within the system. this can be operationalized via which words are accepted (which includes names of people: this person = highly trusted, that person = not). as an example of rules within a social system, see the rules of the saas movement below, as i describe in american business logic.

breaking the above rules carries different costs depending on the player, the circumstances and the general degree of tactfulness. the general insight, however, is that the rules live at the word level. this includes the extent to which other words, words not shown above, are included or excluded.

what we know is that across all knowledge/social systems, there is an equivalent of a terminology acceptance model. a terminology acceptance model (tam) is a conceptual framework that focuses on the acceptance and adoption of terminology (which of course can include names of people and institutions) or specialized language within a specific knowledge/social system. this is where and how rules are encoded. a real-life example is muted words on twitter, which allow users to filter out specific terms, phrases, or hashtags from their timeline, notifications, and search results. just as muted words filter certain content on twitter, social groups or professional environments impose informal boundaries on the types of discussions that are considered valid.

for instance, in a scientific group, introducing a concept like "satan" into a serious discussion would likely lose serious points. referencing feynman or douglas engelbart, albeit to a limited extent, adds points within tech. this is similar to muting or promoting a word: the group collectively avoids or excludes topics, terms, or concepts that fall outside the accepted framework for discussion, thereby maintaining the integrity and focus of the discourse.
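the word-level rules described above can be sketched as a tiny data structure: a set of muted terms that filter a message out entirely, plus a table of terms that gain or lose points inside a group. this is a hypothetical illustration, not an existing tool or twitter's actual mute implementation. the class name `TerminologyAcceptanceModel`, the whitespace-free regex tokenizer, and every weight below are assumptions chosen to mirror the satan/feynman/engelbart example.

```python
import re
from dataclasses import dataclass, field


@dataclass
class TerminologyAcceptanceModel:
    """Hypothetical toy model of a group's word-level rules."""

    muted: set[str] = field(default_factory=set)  # terms that filter a message out
    weights: dict[str, int] = field(default_factory=dict)  # points gained or lost per term

    @staticmethod
    def _terms(message: str) -> list[str]:
        # naive tokenizer (an assumption): lowercase runs of letters only
        return re.findall(r"[a-z]+", message.lower())

    def admits(self, message: str) -> bool:
        """Mute-style filter: reject any message that contains a muted term."""
        return not self.muted.intersection(self._terms(message))

    def standing(self, message: str) -> int:
        """Sum of points a message earns or loses inside the group."""
        return sum(self.weights.get(term, 0) for term in self._terms(message))


# made-up weights for illustration only
science = TerminologyAcceptanceModel(weights={"satan": -5})
tech = TerminologyAcceptanceModel(weights={"feynman": 2, "engelbart": 2})
timeline = TerminologyAcceptanceModel(muted={"crypto"})

print(science.standing("satan explains the anomaly"))  # -5
print(tech.standing("engelbart and feynman both cared about tools for thought"))  # 4
print(timeline.admits("new crypto launch"))  # False
```

the point of the split is that muting and scoring are the same kind of rule at different strengths: a mute is a weight so negative that the message never enters the system at all.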

practically speaking, the social system has an unwritten contract with the individual, encoded at the level of words and data structures: the system offers resources and access in exchange for rule following, which inevitably means sacrificing some space for self-information and for engaging with information outside the system. what this results in is what donald hoffman calls a "reality interface."

hoffman’s idea is that our perception of reality is not a direct reflection of the external world but rather a user interface shaped by evolutionary pressures. our sensory experiences and the concepts we create are designed to serve survival and reproductive needs rather than to reveal an objective truth. the brain, in this sense, doesn't create an accurate model of the world but a simplified interface, much like how a computer interface hides the complexities of its underlying processes to make it easier for users to interact with. "meaning" is a fitness-forcing function; the meanings or interpretations we assign to objects, events, and phenomena are those that enhance our fitness in a given environment, helping us to survive, reproduce, and navigate life more effectively, rather than being true representations of the world.

genuine expertise often arises from individuals with a unique spatial-temporal position: spatially, those with access to insider information but also enough space with self or with god to think independently and tap into the source. (as for the source: if an expert references god or some equivalent as well as the mainstream ideas, and also holds strongly differing opinions and uncommon or little-known references, we’re on the right track.)

life as information integration

life is the physical interface of consciousness, which itself is a function of information integration. the more information integrated, the more life there is. at its apex is god (or the conceptual space for a system that produces life). different levels of information integration are what we describe as paranormal phenomena.

the degree to which an individual integrates with "god" (or with the universal state of oneness, aka the maximum amount of things) is directly proportional to their access to truth. this of course assumes a model of the human as a receiver. an illuminate is someone who has received from a higher source; see spiritual drunkenness.

this is not to say that an illuminate is free of fundamental conceptual errors; they are still subject to the general phenomenon of coordination debt.

‘people who see many connections are often bad at cleaning up their own stuff. people who don't seek connections are often very rigorous and don't do shit their entire life.’ — eric weinstein on terrence howard

the key word is amount: there is an inherent size to a worldview, a total contextual scale of sorts. people's worldviews are fixed or changing to different extents, operationalized as context window size. generally speaking, the larger the size, the more potential variety to deal with complexity…

the idea that we are all part of one single consciousness is bigger than the idea that we are not, and thus the former can handle things that the latter cannot, more so than vice versa.

the planet is bigger than society, the universe is bigger than the planet, and so forth.

if you can't argue for the opposing side, you have a smaller total contextual scale.

similarly, questions have a larger total contextual scale (more variety) than answers.

assemblages have a larger total contextual scale (more variety) than categories.

the degree to which one selects (which is no different than creating) a god that is real is the extent to which life exists. if the god of an argument between two people is mutual understanding, then more overall will be created across space-time than if the god of the argument is winning. if the god of a company is deadlines above empowering people, then less will be created overall in the long term. if the god of your mental health problems is going to therapy rather than physically taking care of your body, then less will be created in the long term.

the total contextual scale, often a function of distance from god, dictates your variety, which sets the terms of what information can be integrated.

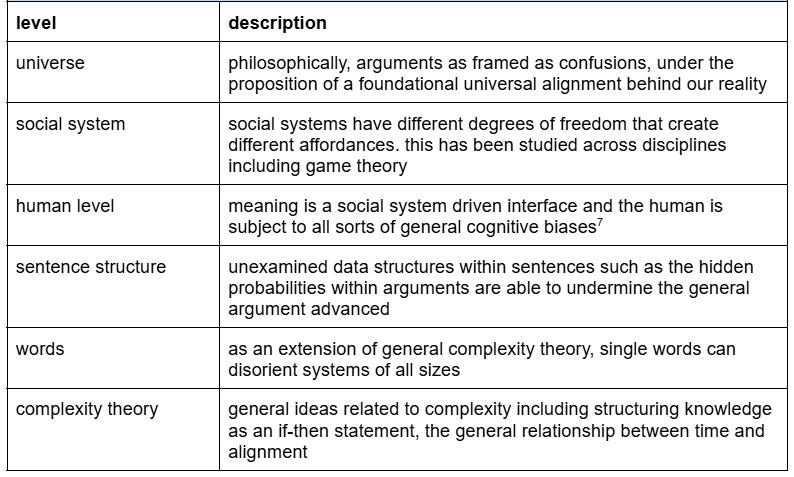

errors arise from misunderstandings in a leaky funnel.

errors arise from misunderstandings in a leaky funnel. a funnel represents the flow of information, modeled as a hierarchy with multiple nodes and bi-directional relationships. this hypergraphical structure shows how information flows between the universe, social/knowledge systems, and the individual.

each level of the funnel represents a conceptual space where confusion or "coordination debt" can occur. for example, in a workplace, poor communication at the team level (a middle layer of the funnel) can lead to missed deadlines at the organizational level.

the funnel can also be viewed as a conversation. conversations, broadly speaking, are interactions between entities—whether words, data structures, or people. for instance, a writer and a reader are having a conversation through the text, while the reader simultaneously interacts with their device and environment.

errors emerge when these interactions "leak" due to misalignment, incomplete data, or miscommunication. for example, a technical manual may confuse its audience if the language assumes prior knowledge that readers lack. this leakage highlights gaps in the funnel that prevent seamless integration of information.
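the leaky funnel above can be given a rough numerical shape. this is a minimal sketch under loud assumptions: it flattens the hypergraph into a one-way chain of hops, and the per-hop retention rates are invented for illustration, not measured. what it shows is that losses multiply, so one leaky middle layer (the team level in the workplace example) drags down everything downstream of it. `surviving_fraction` and `leakiest_hop` are hypothetical names.

```python
from math import prod


def surviving_fraction(retention: dict[str, float]) -> float:
    """Share of the original information that survives every hop of the funnel."""
    return prod(retention.values())


def leakiest_hop(retention: dict[str, float]) -> str:
    """The hop that loses the most information (lowest retention rate)."""
    return min(retention, key=retention.get)


# illustrative retention rates per hop; the numbers are made up
funnel = {
    "universe -> knowledge system": 0.9,
    "knowledge system -> team": 0.7,
    "team -> individual": 0.8,
}

print(round(surviving_fraction(funnel), 3))  # 0.504
print(leakiest_hop(funnel))  # knowledge system -> team
```

a real funnel is bi-directional and branching, so a fuller version would be a weighted graph rather than a chain; the chain is kept here only because it makes the compounding visible.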

levels of integration and expertise

in accordance with the funnel framework above:

a mystic (in its original sense) integrates more information than the illuminated, who integrates more than a genius, who in turn integrates more than someone who is merely excellent.

jesus (the conceptual idea, that is), for example, was more integrated than gandhi; gandhi more than george w. bush; and the neighbor more than george w. bush.

however, specific context windows matter for our person trust system. for instance, an industry insider may have a degree of variety that exceeds that of a genius within a narrow domain. social systems like mainstream physics or political commentary, though often fragmented and limited in integrative capacity, still provide useful frameworks or classification systems, even if these are often built on misguided paradigms.

the disintegration of information systems

as social systems grow more interconnected, individuals become less integrated with themselves or the natural environment and more embedded in artificial systems. over time, this disintegration erodes the ability to connect with "truth" or "god."

the historical trajectory supports this:

in 1900, individuals had far more uninterrupted blocks of time to think deeply than in 1980, 2001, or today.

as time with self becomes rare, philosophers and independent thinkers—away from social systems and often those most capable of integration—have largely disappeared.

the rise of “smart-sounding” over genuinely intelligent discourse reflects this trend. as edgar morin aptly put it: “the progress of knowledge has led to a regression of thought.”

the following quote highlights how certain social incentives can lead some individuals to be more authentic or aligned with truth than others.

‘if you have to choose between an ivy league educated lawyer and someone of the same rank who went to pace or st john u., pick the latter without hesitation. simply, an ivy league education can hide incompetence for a very, very long time.’ — taleb

through the lens of information integration, the pace graduate is exposed to less artificial information (fewer gadgets, less structured discipline, fewer piano lessons, and less "soccer mom" energy). this environment allows for a more natural integration of information, granting greater access to truth. of course, this is a gross oversimplification and depends heavily on the specific context window in question.

trust and complexity in social systems

the critical dabing underlying the general product of knowledge is the extent to which one can trust thy neighbor and the extent to which one can trust a social system.

a neighbor is a metaphorical idea of a non-elite, average person presenting a first-person account of information. this person is not tightly wound into a knowledge/social system, especially one related to the topic the neighbor is discussing. authority, on the other hand, provides indirect information and comes with superior organizational capabilities and resources compared with the neighbor.

trust in authority

the assumption we make here is that social systems tasked with defending the truth often fail because their defense of complexity resembles an attempt to block a tsunami with a chair (see science as the failed defense of complexity). these systems have produced rigid knowledge structures that limit their ability to process or integrate diverse information. the benefits in organizational capability are outweighed by losses from foundational knowledge errors, which produce coordination debt rooted in failed initial conditions.

interlude: ideas about coordination debt from my piece the risk of risk.

‘getting the requirements right is a very difficult task, and therefore a task that is fraught with errors. an error that is caught during requirements development can be fixed for about 10% of the cost associated with an error caught during coding. errors caught during maintenance in the operation of the system cost about 20 times that of an error caught during coding and 200 times the cost of an error caught during requirements development.’ — dennis buede, the engineering design of systems

‘we should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil. yet we should not pass up our opportunities in that critical 3%.’ — donald knuth, father of the analysis of algorithms

‘consider, for instance, the goal of increasing the domestic supply of computer programmers, or the state of computer literacy in general. this could be easily fixed to some metric, such as the number of graduates in relevant programs, or the measure of prospective applicants to programming jobs. this whole movement around getting kids to code, like lambda school, may just be premature optimization or solving the problem at the wrong place’

as per our tool theory, words are the single biggest contributor to coordination debt, and this risk scales not linearly but exponentially: early coordination debts reap vast costs down the line. to illustrate this, consider compounding growth: “a penny doubled every day accumulates more value than half a million doubled every three days, in just over a month.” exponential growth is dependent on initial conditions. this applies both to growth as in a company growing and to growth as in coordination debt within a social system.
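the arithmetic in the interlude can be checked directly. the sketch below normalizes buede's figures so that a requirements-phase fix costs 1 (coding is then about 10, maintenance about 200), and it finds the first day on which a penny doubled daily is worth more than half a million doubled every three days. assumptions: both series start on day 0, doublings happen only on whole days, and amounts are kept in integer cents so the comparison is exact. the names `FIX_COST` and `crossover_day` are hypothetical.

```python
# relative cost of fixing one error, normalized so a requirements-phase fix = 1
# (buede's figures: requirements ~10% of coding, maintenance ~20x coding)
FIX_COST = {"requirements": 1, "coding": 10, "maintenance": 200}


def crossover_day(start_a_cents: int, period_a: int,
                  start_b_cents: int, period_b: int) -> int:
    """First whole day on which series a is worth more than series b.

    Each series doubles once every `period` days, starting from day 0.
    Assumes a eventually overtakes b; otherwise the loop never ends.
    """
    day = 0
    while start_a_cents * 2 ** (day // period_a) <= start_b_cents * 2 ** (day // period_b):
        day += 1
    return day


print(FIX_COST["maintenance"] // FIX_COST["coding"])  # 20
# a penny doubled daily vs. half a million dollars (in cents) doubled every three days
print(crossover_day(1, 1, 50_000_000, 3))  # 38
```

under these assumptions the penny pulls ahead on day 38, a little over a month: the faster-compounding series wins despite starting fifty million times smaller, which is the sense in which early rates of coordination debt matter more than where a system starts.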

when something big happens in a field like physics or biology, it can break down the basic ideas that other fields rely on. for example, a new discovery in physics could change how we think about organizational management, or a big biological breakthrough could flip marketing strategies upside down, and so forth.

every field has its own way of thinking, its own set of terms, and its own methods. for example, business is all about things like marketing strategies, sales funnels, roi, change management, logistics, organizational change, and customer segmentation. all these concepts rest on deeper ideas, but they often fail to keep up with new fundamental knowledge, or were never linked to fundamental knowledge in the first place, because the knowledge systems lack integration capabilities. this lack holds across fields and disciplines: math, philosophy, medicine, science, construction, design, and agriculture are all alike in this respect, each unable to attract and integrate the information that would close out coordination debt.

these quotes comment on a social system’s delayed integrators:

‘physics acquired the concept of inertia in 1687, through the principia. but it was 162 years before anyone invented the adjective, and spoke of 'a weight of inertial resistance'. where would science be without that word today, a mere 100 years later? as to the word entropy, the centenary of the birth of which falls in 1965, we are still so unsure of ourselves that there is no official record that the adjective has been coined yet. i have in fact coined it myself, and offered such phrases as 'entropic drift'; but no one seems to want them.’ — duane hybertson, model based systems engineering

‘[...] why doctors for centuries imagined that their theories worked when they didn't; why there was a delay of more than two hundred years between the first experiments designed to disprove spontaneous generation and the final triumph of the alternative, the theory that living creatures always come from other living creatures; why there was a delay of two hundred years between the discovery of germs and the triumph of the germ theory of disease; why there was a delay of thirty years between the germ theory of putrefaction and the development of antisepsis; why there was a delay of sixty years between antisepsis and drug therapy.’

‘i call this the "translational problem," inspired by a great paper by ioannidis (my hero) et al., the life cycle of translational research for medical interventions. the paper illustrates how long it takes for an initial scientific discovery to reach practical implementation—and how this cycle is, unfortunately, getting longer. my concern, however, goes beyond the time delay. the fundamental issue is that the gap between knowledge and practice is not easily resolvable because the relationship is not unidirectional. instead of a straightforward progression from knowledge to practice, the arrow often points in the opposite direction—from practice to knowledge.’

‘ecosystem services research faces several challenges stemming from the plurality of interpretations of classifications and terminologies. in this paper we identify two main challenges with current ecosystem services classification systems: i) the inconsistency across concepts, terminology and definitions, and; ii) the mix up of processes and end-state benefits, or flows and assets. although different ecosystem service definitions and interpretations can be valuable for enriching the research landscape, it is necessary to address the existing ambiguity to improve comparability among ecosystem-service-based approaches’

'there is a growing mountain of research. but there is increased evidence that we are being bogged down today as specialization extends. the investigator is staggered by the findings and conclusions of thousands of other workers — conclusions which he cannot find time to grasp, much less to remember, as they appear. yet specialization becomes increasingly necessary for progress, and the effort to bridge between disciplines is correspondingly superficial.' — vannevar bush, as we may think

biology hasn’t defined life, psychology claims there is no such thing as a soul, and science says god isn’t to be taken seriously and has not yet arrived at the idea that what is real is good. systems theory is no longer really asking what a system is.

‘a single change within something fundamental can disorient understandings across systems. in recent decades, systems theory has shifted toward an epistemological focus, similar to a trend in mainstream philosophy. instead of examining the nature of systems themselves (ontology), the focus is now on how we understand, interpret, and model systems (epistemology). in simpler terms historically, both systems theory and philosophy were more concerned with understanding the essence of reality (ontology), asking questions like "what is a system?" or "what is the essence of things?" over time, the focus has shifted to asking "how do we know what a system is?" or "how do we understand and interpret systems?" (epistemology). this epistemological focus has become dominant recently, which some might find surprising or problematic because it takes attention away from the deeper question of what systems truly are at their core. in essence, it's suggesting that we might be overlooking the complexity and unknowns about systems, assuming we understand them fully when, in reality, there may be much more happening beneath the surface that we're unaware of.’ — what is a system, coordination protocols

science runs on a model that says (a) knowledge is what can be tested and falsified, a method of inquiry that relies on the principle of falsifiability to distinguish science from non-science; (b) it is the dominant truth; (c) our senses are cosmically blind. the coordination debt and pathology of the social system that arise from this are enormous. if the definition of life has not been formally registered within our general knowledge systems at large, nor has the concern that we lack proper definition architecture to deal with complexity, then the extent to which a given field deviates from reality must by default be assumed to be high.

the result is that this holds for just about every field…

‘the work of gilles deleuze has much to offer contemporary thinking in psychology. as the papers in this volume show, the restructuring of what are usually taken as ‘topics’ for psychological analysis into genuine ontological and epistemological concerns leads to a profound questioning of how we think about the nature of ‘the psychological’ and the ways it can be studied. deleuze does not so much provide a new grounding for the discipline, but instead calls into question the very idea of psychology.’

postulate: if a fundamental concept has not been formally defined within a system of knowledge, and if the system lacks the architecture to properly handle complexity, then the extent to which the system deviates from reality must, by default, be assumed to be significant.

what does it mean to formally define a concept? related is this excerpt from my post symbol grounding; the thing that matters.

if i ask an employee to ‘try to get x done,’ and his definition of try is like apple juice and my definition of try is like everclear vodka then we are not able to coordinate because the word try is not symbol grounded.

when a system is built on things that are symbol grounded, it works. take the nba. the three-pointer is very well symbol grounded: a shot made from beyond the three-point line earns three points. duh. this rule is consistent across all nba games, regardless of location or audience. the symbol is reinforced by visual markers on the court, official rules, and shared understanding among players, coaches, referees, and fans.

symbol grounding postulate: the knowledge dynamics across social systems are not particular to the social system but rather dependent on the degree to which the core ideas have been symbol grounded.
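the contrast between "try" and the three-pointer can be sketched as a check on whether two people's definitions of a term produce the same verdict on every case. this is a hypothetical illustration: the names `coordinate`, `rulebook`, `manager` and `employee` are invented, the effort thresholds standing in for "apple juice" and "everclear" are made up, and the three-pointer is reduced to a single `beyond_line` flag rather than real court geometry.

```python
from typing import Callable

# a definition turns a concrete case into a yes/no verdict
Definition = Callable[[dict], bool]


def coordinate(defs_a: dict[str, Definition], defs_b: dict[str, Definition],
               term: str, cases: list[dict]) -> bool:
    """True when two people's definitions of `term` agree on every case."""
    return all(defs_a[term](case) == defs_b[term](case) for case in cases)


# grounded: everyone reads the same rulebook, tied to a visible line on the court
rulebook: dict[str, Definition] = {"three_pointer": lambda shot: shot["beyond_line"]}

# ungrounded: "try" means different amounts of effort (hypothetical thresholds)
manager: dict[str, Definition] = {"try": lambda task: task["effort_hours"] >= 8}  # everclear
employee: dict[str, Definition] = {"try": lambda task: task["effort_hours"] >= 1}  # apple juice

shots = [{"beyond_line": True}, {"beyond_line": False}]
tasks = [{"effort_hours": h} for h in (0, 2, 10)]

print(coordinate(rulebook, rulebook, "three_pointer", shots))  # True
print(coordinate(manager, employee, "try", tasks))  # False
```

the two sides of "try" agree at the extremes (no effort, a full day) and split in the middle, which is exactly where coordination quietly fails; the grounded term has no middle to split.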

in summary, truth is not a fixed or objective entity but a product of social and historical conditions. different historical periods and societies have their own specific regimes of truth, determining what is considered valid knowledge, acceptable behavior, and legitimate discourse. truth is constructed through power relations and social institutions, not discovered or revealed, with institutions like the media, education systems, scientific communities, and political authorities playing a crucial role in shaping and disseminating it. within each regime, certain knowledge claims and practices are privileged, while others are marginalized. these power dynamics in the production and dissemination of truth have significant implications for social control and authority.1

trust in the neighbor

we’ve laid out some broad ideas about the general state of knowledge systems. now to the ‘neighbor.’ i cover this more extensively in ‘the paranormal, the conspiratorial and the question of competence.’

postulate of conceptual detachment: a person may, without personal gain or incentive, share ideas that are perceived as fantastical or extraordinary.

postulate of sameness: multiple other persons, unlinked, also without any personal gain or incentive, may share the same ideas that are perceived as fantastical or extraordinary.

postulate of human integrity: a person exhibiting these ideas may still be perceived as honest, healthy, and trustworthy based on personal interactions and character assessment.

postulate of cognitive divergence: it is possible for individuals to genuinely hold beliefs in extraordinary or unconventional ideas (e.g., fairies, lizard people, or multiple axiom deviations from a knowledge system, such as the aether) without those beliefs directly conflicting with their social, psychological, or emotional well-being.

postulate of mythological affinity: extraordinary beliefs held by individuals may align with or reflect historical myths, legends, or cultural narratives that have been perpetuated across generations.

postulate of absolute conviction: a person may hold and assert extraordinary beliefs with such intensity and conviction that they swear by them on personal and deeply cherished values, even in the absence of verifiable evidence.

postulate of consistency of personal values: despite the nature of their extraordinary beliefs, such individuals may still act consistently in accordance with moral or ethical standards, displaying qualities of reliability and trustworthiness.

so, you're left with a few possible conclusions: these people are either actively deceiving, or they are truthful in spirit or in letter. the "letter" is the explicit, detailed interpretation; the "spirit" is the deeper, often more abstract intention behind the letter. if they are truthful in spirit but not in letter, we are assuming a disparate yet collective delusion.
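the postulates of conceptual detachment and sameness can be read in bayesian terms: each unlinked, disinterested witness multiplies the odds that a claim is true by some likelihood ratio, P(testimony | true) / P(testimony | false). a minimal sketch, assuming the witnesses really are conditionally independent and that a likelihood ratio can be assigned to each; the function name and all numbers are hypothetical, chosen only for illustration:

```python
import math


def posterior_probability(prior: float, likelihood_ratios: list[float]) -> float:
    """Update a prior probability with independent testimonies.

    Each entry in likelihood_ratios is P(testimony | claim true) / P(testimony | claim false).
    Independence (the 'unlinked' condition) is what allows the ratios to multiply in odds form.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    prior_odds = prior / (1.0 - prior)
    # Sum in log-odds space so many witnesses don't overflow or underflow the product.
    log_odds = math.log(prior_odds) + sum(math.log(lr) for lr in likelihood_ratios)
    odds = math.exp(log_odds)
    return odds / (1.0 + odds)


# Hypothetical: a one-in-a-million prior and five unlinked witnesses, each worth 10x evidence.
print(posterior_probability(1e-6, [10.0] * 5))  # roughly 0.09
# If the five are actually linked (repeating one source), they count as roughly one witness.
print(posterior_probability(1e-6, [10.0]))  # roughly 1e-05
```

the design choice that matters is the independence assumption: if the witnesses are not actually unlinked, multiplying their ratios overstates the evidence, which is why the postulate of sameness insists on ‘unlinked.’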

postulate of the truth of a person: a person left to his own devices, in the most basic sense, is more true than a social system. cue the famous nietzsche quote: ‘in individuals, insanity is rare; but in groups, parties, nations and epochs, it is the rule.’

to make this more practical, let’s take this abridged quote from a joe rogan interview with mycologist paul stamets where he describes a precognition event.

‘the multiverse. i’ve had one or two profound experiences with it—moments where time and reality shifted in ways i can’t fully explain. these moments are so profound that i still can’t wrap my mind around them. it’s tied to psilocybin experiences. i think psilocybin might act as a portal—a one-rule portal, if you will—into the multiverse. i’m starting to sound like terence mckenna, but the idea is that time can bend, and multiple universes can exist simultaneously, layered over one another. that night, there were four of us—all scientists or aspiring scientists, including my brother. we decided to eat the mushrooms. they were less potent than psilocybe cubensis, so we had to consume about 50 mushrooms each to achieve the same effect. we made milkshake-like smoothies to get them down—it was awful, a serious gag reflex challenge. so, i go to bed, and i'm laying there, having a full-blown experience. i can barely sleep because all the colors are keeping me awake, and my mind is racing. then, i have a lucid dream. i wake up and go downstairs. i say, "i had this crazy dream!" they ask, "what was your dream?" i say, "i saw thousands of cattle, dead, baking in the sun." i told them, "i think there’s going to be a nuclear war, but what could kill all these cattle?" this was during the reagan administration, and tensions were really high between the soviet union and the united states. they joked with me, saying, "well, okay, what’s going to happen?" i replied, "i don’t know, but i was in olympia, and i need to rush up to darrington to stay in my cabin because my books and manuscript are up there. i need to save my research." they laughed and said, "well, when’s the world going to end, paul?" and i said, "well, not this weekend." that’s in two days; it’s next weekend. so, they wrote on the calendar, december 1st, and i put it in my book. i think it was 1975, the end of the world. they wrote, "paul predicts the end of the world." we forgot about it. 
the next week, there were massive rains, huge amounts of snowfall. then, on wednesday and thursday, there was a temperature inversion—it flipped to 75 to 85 degrees. all the snow started to melt, the rivers were flooding, and my little cabin, which was right next to the river, was in danger. that river could swell six feet in a single day just from the snowmelt. i was very close to a volcano with big glaciers. i thought, "oh my gosh, i could lose my manuscript, all my research. i need to get up there." so, i’m watching the news. roads were being closed. i had to take the back way through rockport, washington, to get to my cabin. when i got there, the bank had eroded about 10 feet. my cabin was only 10 to 12 feet away from the river, on the verge of falling in. i grabbed my manuscript, my books, and rescued all the materials i had. but i couldn’t get out because the roads were closed. i had to wait two days before the roads finally opened. when i drove out of the valley into snohomish valley, i went around a bend, and there it was: a brilliant, sunny, warm day. and there, floating in the fields, were hundreds and hundreds of dead cattle. how do you explain that? i think i entered the multiverse. as a scientist, i realize that when you say something like that, you open yourself up to ridicule. (interviewer) do you feel hesitant to communicate these ideas? to a degree, yes. but you know, i’m 62 years old, and at some point, i just don’t care. this is true. this happened to me. i can push the envelope on these ideas because the credibility of my research is well-established. i can save the bees. do you care whether i’ve taken psilocybin mushrooms if i can save your farm, your family, your country, or the world billions of dollars in biosecurity? i care more about that. so, i’m telling you these things—i’m not making them up. i don’t have to. just because you can’t explain it doesn’t mean it’s not true. 
i think we need to accept that reality is not limited to the perceptions we have traditionally used. that’s the beauty of it.’

does one trust paul in letter and in spirit? if we assume he is not lying, the story is simply too improbable to attribute to randomness. i think we can trust paul to the letter. this is a high-cost, low-reward admission for paul.

the cost of truth

‘some of the cons i suffered for prose’ — jay electronica, universal soldier

‘i have a deep respect for people who are willing to push on issues like this despite the system being aligned against them but, my respect notwithstanding, basically no one is going to do that. a system that requires someone like kyle to take a stand before successful firms will put effort into correctness instead of correctness marketing is going to produce a lot of products that are good at marketing correctness without really having decent correctness properties (such as the data sync product mentioned in this post, whose website repeatedly mentions how reliable and safe the syncing product is despite having a design that is fundamentally broken).’ — dan luu

finally, the pursuit of truth comes with social and professional costs. people like steven greer, who left a medical career, or jean baudrillard, who challenged foucault, paid heavily for their beliefs. similarly, referencing controversial ideas (e.g., flat-earth theories) carries personal risks.

the social cost of truth-seeking is often a function of relationship contracts within systems. where feedback loops are tight and consequences immediate (e.g., driving a car, crashing a plane), truth is rewarded. however, in systems where feedback loops are loose, truth-tellers are often punished, and self-alignment with principles becomes the primary reward. by examining the sacrifices truth-seekers make, one can probabilistically assess the credibility of their claims.
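the last claim, that sacrifice is evidence, can be sketched as a costly-signal update. a minimal sketch under a loudly hypothetical model: assume someone who knows a claim is false asserts it less often as personal cost outweighs expected reward (modeled here, arbitrarily, with a logistic curve), while an honest person asserts it at a fixed rate regardless of cost. the function names, the logistic form, and every parameter are assumptions for illustration, not a measured model:

```python
import math


def p_assert_if_false(cost: float, reward: float, sensitivity: float = 1.0) -> float:
    """Hypothetical: a deceiver's willingness to assert falls off logistically as cost exceeds reward."""
    # Clamp the exponent so math.exp cannot overflow for extreme inputs.
    z = max(min(sensitivity * (cost - reward), 700.0), -700.0)
    return 1.0 / (1.0 + math.exp(z))


def credibility(prior: float, cost: float, reward: float,
                p_assert_if_true: float = 0.5, sensitivity: float = 1.0) -> float:
    """Posterior probability that a claim is true, given that it was asserted at this cost and reward."""
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    likelihood_ratio = p_assert_if_true / p_assert_if_false(cost, reward, sensitivity)
    odds = prior / (1.0 - prior) * likelihood_ratio
    return odds / (1.0 + odds)


# Hypothetical numbers on an arbitrary cost/reward scale.
print(credibility(0.1, cost=8.0, reward=1.0))  # high cost, low reward: about 0.98
print(credibility(0.1, cost=1.0, reward=8.0))  # low cost, high reward: about 0.05
```

under this toy model, when cost equals reward the assertion carries no information and the prior is unchanged; high-cost, low-reward claims gain credibility, and cheap, lucrative ones lose it.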

spirit and letter continued

why do people think mark zuckerberg is a lizard? is mark zuckerberg actually a lizard? do people mean this in letter? yes. is mark zuckerberg a lizard in the literal sense? no, i don’t think so. but are they correct in spirit? yes.

if we model a lizard as a cold-blooded creature with limited capacity for empathy, playfulness, or general fluidity of movement — traits that are typically associated with human vitality and expression, especially in childhood — then the spirit makes more sense. it’s not about whether zuckerberg is biologically a reptile, but rather about the perception of his behavior: detached, calculating, and perhaps lacking the warmth and expression that people typically associate with genuine humanity.

i don’t believe in lizard people because i really can’t. i have too many relationship contracts that would be voided by this belief. even so, i acknowledge that i can imagine a scenario in which i meet disparate people that i trust who swear they have seen them and who i don’t think are subject to cognitive bias or external phenomena.

interfaces as solutions

a major limiting step to integrating information is the absence of effective interfaces—individuals who can communicate across knowledge domains. different fields have distinct paradigms, tools, and mental models, making cross-disciplinary communication inherently challenging. for instance:

designers and engineers often struggle to align their perspectives due to mismatched structures.

managers or interdisciplinary thinkers serve as critical interfaces, facilitating communication and reducing misunderstandings.

such translators are rare but invaluable. the incentives to become a translator are typically absent; social systems defend against integration. in rare moments, when someone has the social capital and a broad variety of experiences, that individual operates across boundaries and facilitates understanding. they embody ashby’s law of requisite variety by bridging fragmented systems and enabling information integration. michael levin, the biologist, is a modern example of this role.

foucault spent his career on this; https://michel-foucault.com/key-concepts/